The use of artificial intelligence in the private sector is accelerating, and the financial authorities have no choice but to follow if they are to remain effective. Even if they prefer prudence, their use of AI will probably grow by stealth.

The financial authorities are rapidly expanding their use of artificial intelligence (AI) in financial regulation. They have no choice. Competitive pressures drive the rapid private sector expansion of AI, and the authorities must keep up if they are to remain effective.

The impact will mostly be positive. AI promises considerable benefits, such as the more efficient delivery of financial services at a lower cost. The authorities will be able to do their job better with fewer staff (Danielsson 2023).

Yet there are risks, particularly for financial stability (Danielsson and Uthemann 2023). This is because AI relies far more than humans do on large amounts of data to learn from, needs immutable objectives to follow, and finds it difficult to understand strategic interactions and unknown unknowns.

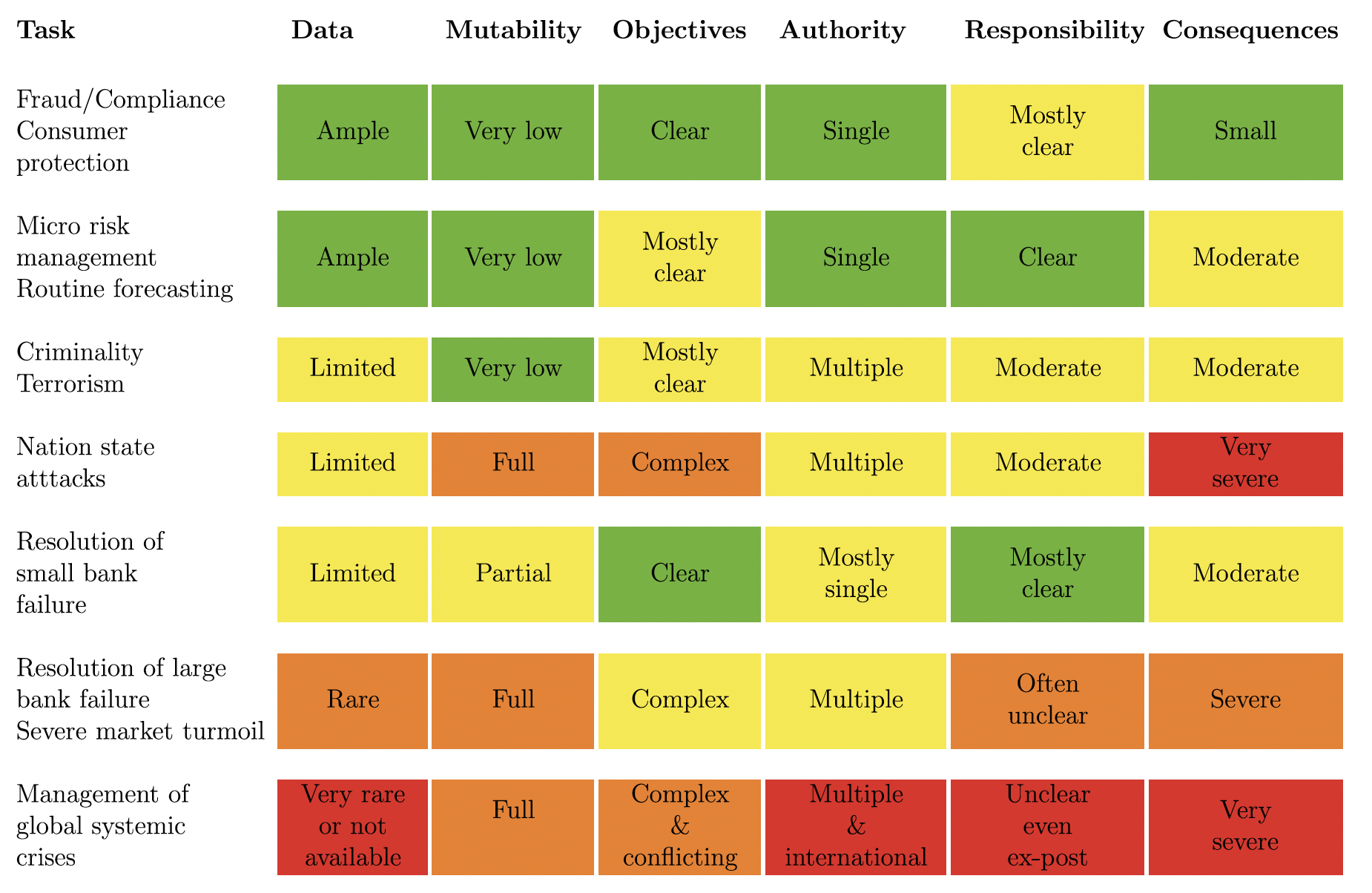

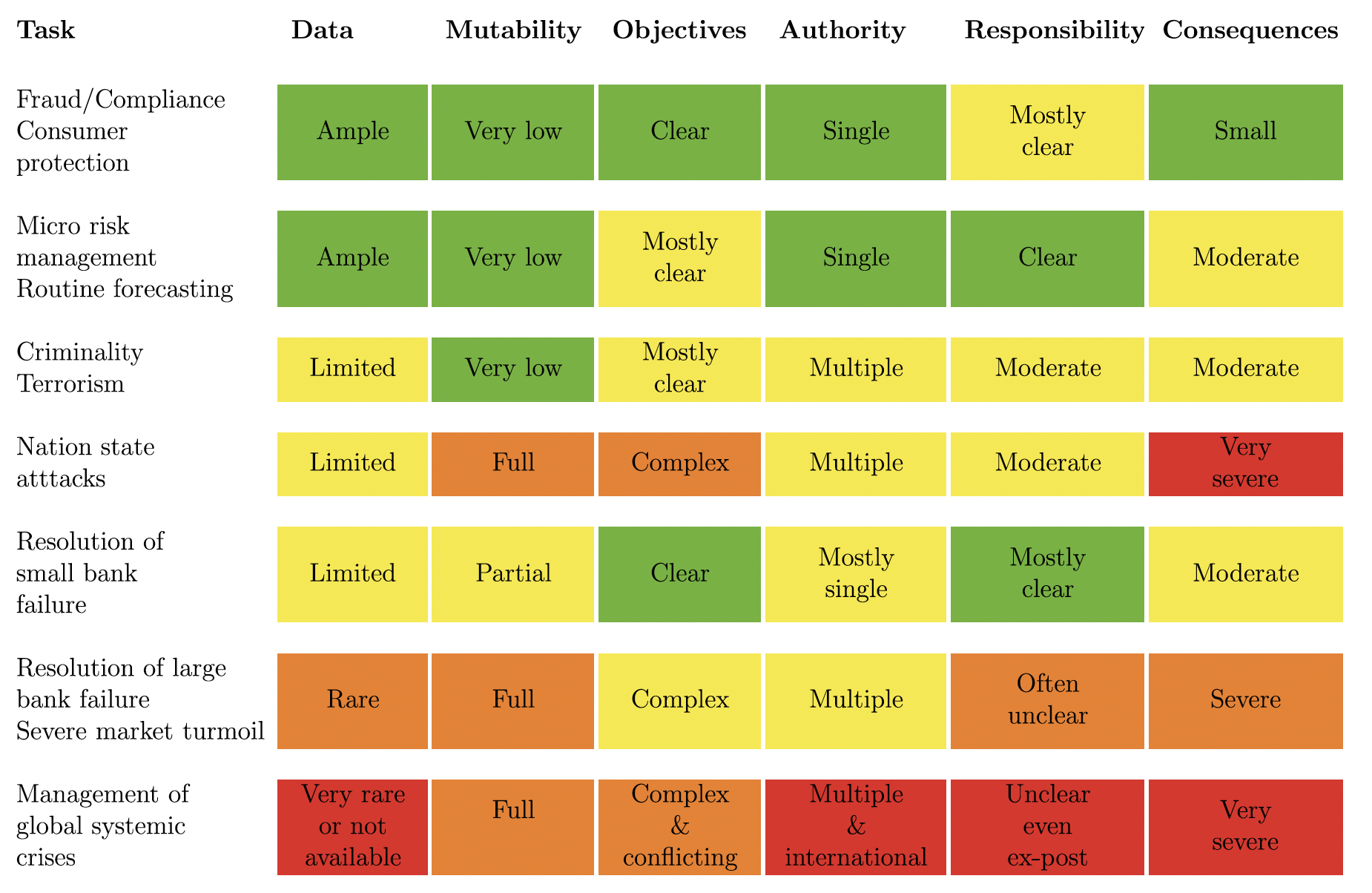

We propose six questions to ask when evaluating the use of AI for regulatory purposes:

Table 1 shows how the various objectives of regulation are affected by these criteria.

Financial crises are extremely costly. The most serious ones, classified as systemic, cost trillions of dollars. We will do everything possible to prevent them and lessen their impact if they occur, yet this is not a simple task....

more at CEPR